Understanding & Applying Research

As a sports/health consumer, understanding what makes good research is often complex. Can you rely on the results? Is the paper or study a solid work that has credibility and, more importantly, applicability?

It can be difficult to understand the research landscape in 2023. I have personally published five books and numerous studies, so I have seen this topic from all angles.

When I first got into this field, research was very different. Sports Science and Kinesiology were rapidly expanding. To publish, you had to be a member of a professional society, which then gave you access to its journal for publication. This helped research become more formal and established a peer-review, or credibility, system. It was a great way to delve into a specialty like exercise physiology or biomechanics at that time.

The research circulated only among the members who had access to the organization and its journal. For the most part, the public never knew about these studies. The equipment needed back then was also very expensive, so, simply put, not many people could perform analyses in their respective fields.

For example, in the 1980s the Beckman Metabolic Cart (which measured metabolic response, including VO2 max) was priced at over $80,000. In today’s dollars, that would be about $260,000. Now you can buy a far better system for $50,000. More people have good equipment than in the early days of the sports science revolution, hence more research opportunities.

As everything in sport science and health expanded, a multi-consideration model emerged, so research can now consider various factors, issues, and perspectives. For example, some exercise physiology studies have a lifestyle or even a psychological component in the research design.

As the internet connected researchers, students, and interested parties, there was a fundamental shift in research publication. Being a member of a society and publishing in that specific journal was gradually replaced by multi-disciplinary journals that would accept a wide variety of topics. These journals have one huge draw – open access, meaning anyone can read the articles or research at any time. To stay in business, these new journals charge for publication to offset editorial and staff costs. And while print publications are still out there, the tilt has been toward online publication.

These online journals are peer-reviewed. An editorial board reads the research or article and evaluates its accuracy, merit, and application to the field. These journals are even better at detecting good research because the editorial board is more diverse in professional background. Everything I have published over the last ten years has gone through this process.

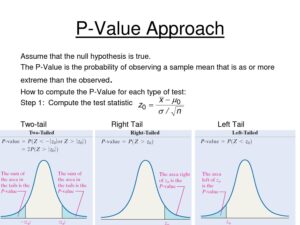

Modern, peer-reviewed journals still require validation in the form of accompanying statistics; studies are only published with supporting statistics. That is one area the reviewer keys on for the acceptance decision. In some studies, the sample size is only five subjects. What matters is how large the change was (or wasn’t) and the “P” value, or significance. The researcher may note that the effort is a beta, or initial, investigation and that more subjects and studies are warranted to fully validate the results and relationships.

If the statistical P value is less than 0.05, the result is conventionally considered significant. Above that, the data might trend in a direction, yet the inference is weaker. A P value of 0.05 or less means that, if the study was a good design and there were truly no effect, a result at least this large would show up by chance less than 5% of the time. It does not mean there is a 95% chance the finding is true. Conversely, research can be set up to select the structure and values that produce positive results. A good researcher, a scientific mind, and a journal team can sift through this to see whether the study design was sound and the results were significant.
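To make the idea of a P value concrete, here is a minimal sketch of an exact permutation test, which is only one of several ways to obtain a P value and is not taken from any study discussed here. The VO2 max numbers and the function name `permutation_p_value` are hypothetical, made up for illustration. The test asks: if group labels were assigned by chance alone, how often would the gap between group averages be at least as large as the one observed?

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in group means."""
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    indices = range(len(pooled))
    total = 0
    extreme = 0
    # Try every possible way of splitting the pooled subjects into two groups.
    for chosen in combinations(indices, n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in indices if i not in chosen_set]
        total += 1
        # Count splits whose gap is at least as large as the observed gap
        # (tiny tolerance guards against floating-point rounding).
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total

# Hypothetical VO2 max values (ml/kg/min) for two groups of five subjects.
control = [40.1, 42.3, 41.0, 39.5, 43.2]
trained = [45.0, 47.2, 44.1, 46.3, 48.0]

p = permutation_p_value(control, trained)
print(f"P = {p:.4f}")  # 2 of the 252 possible splits are this extreme
```

With these made-up numbers every trained subject scores higher than every control subject, so only 2 of the 252 possible splits are as extreme as the real one, and P falls well under 0.05 even with five subjects per group.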

And you can have a significant result in an area where the result doesn’t matter. For example: “People who fly kites from 5-6 p.m. were 86% less likely to get into a car crash at rush hour; significance was P= 0.0122.” However, if the subject was not on the road during rush hour, they were not likely to get into a car crash in the first place. Here significance, or the magnitude of the change, does not equal applicability and relevance to the human condition. That would be a meaningless use of “P.”

Another factor is the homogeneity, or similarity, of the subjects. What about a study on the effects of relaxation on mental performance? Because IQ can range from 55 to 150, you would not compare junior high school students with rocket scientists with multiple degrees. Those are very different starting points. You would compare similar groups to similar groups.

For some measurements, the values fall within a very narrow range, like human body temperature: a few degrees here and there. However, when you get to a measurement like bench press strength relative to body weight, untrained non-athletes would likely average about 35% of their body weight. In contrast, a strength and power athlete would likely lift 127% or more of their body weight in a single effort. It is almost impossible to compare a trait with high homogeneity against a characteristic where the deviation from the average is enormous. That is another thing to look for when you read through a research study.
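One common way to put a number on this spread is the coefficient of variation, the standard deviation expressed as a percentage of the average. The sketch below is illustrative only: the temperature readings and bench press ratios are hypothetical values chosen to span the 35% and 127% figures mentioned above, and the function name is my own.

```python
from statistics import mean, stdev

def coefficient_of_variation(values):
    """Sample standard deviation as a percentage of the mean."""
    return 100 * stdev(values) / mean(values)

# Hypothetical body temperatures (degrees Fahrenheit): tightly clustered.
body_temp_f = [97.9, 98.2, 98.6, 98.4, 99.0, 98.1]

# Hypothetical bench press as a fraction of body weight: widely spread,
# from untrained (about 0.35) to strength athletes (1.27 and above).
bench_ratio = [0.35, 0.50, 0.80, 1.00, 1.27, 1.45]

cv_temp = coefficient_of_variation(body_temp_f)
cv_bench = coefficient_of_variation(bench_ratio)

print(f"Body temperature CV: {cv_temp:.1f}%")
print(f"Bench press ratio CV: {cv_bench:.1f}%")
```

In this made-up data the temperature readings vary by well under 1% of their average, while the bench press ratios vary by tens of percent, which is why the same size of change means very different things for the two traits.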

What about the number of subjects? Pharmaceutical companies often have 5,000 subjects in a study. If you say a drug works on something, it had better be solid across the board to satisfy the FDA. In most of these cases, with medical research, the subjects receive pay and/or some other form of compensation. They are motivated to participate in the study. It is not abnormal for the total budget in these studies to be well over ten million dollars. Research on sports science and health is a different case: drug companies do not fund those studies.

Reliance on the goodwill and interest of the subject(s) is crucial to participation in this case. Research takes time, and if someone is getting poked or prodded in some form, that means time away from their typical day. The one exception is students or athletic teams, who are strongly encouraged to participate! This is one reason why much of the research uses five to 20 subjects. Remember, a small number can work if the statistics bear out the result.

Consider how this information gets to you as the consumer. There is no clearinghouse for research. You can read it in the journal; however, in most cases you have to find it. If the research was sponsored or funded by an outside concern (a company or organization), they may use their public relations machine to promote awareness of the study. Researchers draw the line at promoting their own study, as it is not perceived as professional or even ethical. They will give interviews upon request.
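As a rough illustration of how five subjects can be enough, the sketch below runs a paired comparison on two hypothetical before-and-after data sets. All numbers, the function name `paired_summary`, and the choice of a paired t statistic are assumptions for illustration, not a method taken from a specific study. The cutoff of 2.776 is the standard two-sided 0.05 critical value of Student's t with 4 degrees of freedom (five subjects minus one).

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 0.05 critical value of Student's t with 4 degrees of freedom.
T_CRITICAL_DF4 = 2.776

def paired_summary(pre, post):
    """Return (mean change, paired t statistic, effect size) for pre/post scores."""
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)
    t_stat = mean_diff / (sd_diff / sqrt(n))
    effect_size = mean_diff / sd_diff  # change in units of its own spread
    return mean_diff, t_stat, effect_size

# Hypothetical bench press results (kg) for the same five subjects.
pre = [100, 110, 95, 120, 105]
post_consistent = [108, 115, 104, 126, 112]  # everyone improves by 5 to 9 kg
post_noisy = [120, 105, 100, 121, 109]       # changes range from -5 to +20 kg

consistent = paired_summary(pre, post_consistent)
noisy = paired_summary(pre, post_noisy)

for label, (change, t_stat, effect) in (("consistent", consistent), ("noisy", noisy)):
    verdict = "significant" if abs(t_stat) > T_CRITICAL_DF4 else "not significant"
    print(f"{label}: mean change {change:.1f} kg, t = {t_stat:.2f}, {verdict}")
```

In the consistent data set every subject improves by a similar amount, so the t statistic clears the cutoff easily. In the noisy set the average change is nearly as large, yet the subjects disagree so much that the result does not reach significance with only five people.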

In short, modern research can be meaningful if published in a peer-reviewed journal or at an annual meeting that uses the same criteria. Researchers should report statistics. It might be a valid conclusion if they don’t, but statistics tell the story.

There is quite a bit to what makes a good, applicable research study in sports science. Being an educated reader will help you select the studies that really mean something.

References

Batterham AM, Hopkins WG. Making meaningful inferences about magnitudes. Int. J. Sports Physiol. Perform. 2006,1, 50–57.

Beckerman H, Roebroeck M, Lankhorst G, Becher J, Bezemer PD, Verbeek A. Smallest real difference, a link between reproducibility and responsiveness. Qual. Life Res. 2001,10, 571–578.

Bishop D. An applied research model for the sport sciences. Sports Med. 2008,38, 253–263.

Burton PR, Gurrin LC, Campbell MJ. Clinical significance not statistical significance: A simple Bayesian alternative to p values. J. Epidemiol. Commun. Health 1998,52, 318–323.

Cohen J. Statistical Power Analysis for the Behavioral Sciences; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988.

Duthie GM, Pyne DB, Ross AA, Livingstone SG, Hooper SL. The reliability of ten-meter sprint time using different starting techniques. J. Strength Cond. Res. 2006,20, 246.

Field A, Miles J, Field Z. Discovering Statistics Using R; SAGE Publications: Thousand Oaks, CA, USA, 2012.

Greenland S, Senn SJ, Rothman KJ, Carlin JB, Poole C, Goodman SN, Altman DG. Statistical tests, P values, confidence intervals, and power: A guide to misinterpretations. Eur. J. Epidemiol. 2016,31, 337–350.

Guyatt GH, Kirshner B, Jaeschke R. Measuring health status: What are the necessary measurement properties? J. Clin. Epidemiol. 1992,45, 1341–1345.

Haig BD. Tests of Statistical Significance Made Sound. Educ Psychol Meas. 2017 Jun;77(3):489-506.

Hedges L, Olkin I. Statistical Methods for Meta-Analysis; Academic Press: Orlando, FL, USA, 1985.

Hopkins WG, Hawley JA, Burke LM. Design and analysis of research on sport performance enhancement. Med. Sci. Sports Exerc. 1999,31, 472–485.

Hopkins WG. How to interpret changes in an athletic performance test. Sport Sci. 2004,8, 1–7.

Humberstone-Gough CE, Saunders PU, Bonetti DL, Stephens S, Bullock N, Anson JM, Gore CJ. Comparison of live high: Train low altitude and intermittent hypoxic exposure. J. Sports Sci. Med. 2013,12, 394.

Lakens D. Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Front. Psychol. 2013,4, 863.

Meng XL, Rosenthal R, Rubin DB. Comparing correlated correlation coefficients. Psychol. Bull. 1992,111, 172.

Pyne DB. Interpreting the results of fitness testing. In International Science and Football Symposium; Victorian Institute of Sport Melbourne: Melbourne, Australia, 2003.

Rhea MR. Determining the magnitude of treatment effects in strength training research through the use of the effect size. J. Strength Cond. Res. 2004,18, 918–920.

Tenny S, Abdelgawad I. Statistical Significance. 2022 Nov 21. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2023 Jan–.

Tukey JW. Some thoughts on clinical trials, especially problems of multiplicity. Science 1977,198, 679–684.

Unnithan VB, Wilson J, Buchanan D, Timmons JA, Paton JY. Validation of the Sensormedics (S2900Z) metabolic cart for pediatric exercise testing. Can J Appl Physiol. 1994 Dec;19(4):472-9.

Welsh AH, Knight EJ. Magnitude-based Inference: A statistical review. Med. Sci. Sports Exerc. 2015.